Winter 2024

The AI emperor hath no content management clothes

Michael Iantosca, Avalara, Inc

Making AI smarter with structured content and how DITA can supercharge AI

The phrase “The Emperor has no clothes” originates from “The Emperor’s New Clothes,” a famous short tale by Danish author Hans Christian Andersen. It was first published in 1837 as part of his Fairy Tales Told for Children collection. In the story, swindlers pretend to make an invisible suit of clothes for an emperor. They claim the fabric is invisible to anyone unfit for their position or “hopelessly stupid.” The emperor, not wanting to appear unworthy or foolish, pretends to see the clothes and praises them, as do all his ministers and courtiers. When the emperor parades before the people, everyone goes along with the pretense—until a child in the crowd exclaims, “But he isn’t wearing anything at all!”

I can’t imagine a better metaphor for the state of generative AI solutions and the denial that engulfs them. In this realm, the fabric is content and content management – and the inadequacy thereof.

In today’s fast-paced world of artificial intelligence, everyone’s talking about how to make machines more intelligent and valuable. Generative AI, such as OpenAI’s ChatGPT, grabbed the headlines, but as companies dive into this tech, they hit some bumps. Traditional AI retrieval methods, especially those that rely on Retrieval-Augmented Generation (RAG), aren’t cutting it. The good news? There’s a better way, and it involves using structured content models to power knowledge graphs. Let’s explain how this works and why we think it’s a game-changer.

What’s Wrong with the Old Way?

Let’s start by discussing what’s not working as well as expected. For several years, traditional vector-based RAG models have been the preferred method for retrieving the potentially relevant subset of content before passing it to a large language model (LLM), such as the models behind ChatGPT, to generate more accurate answers than an LLM produces alone. However, many companies new to developing generative AI applications are only now discovering the limitations of vector-based retrieval and are starting to turn to knowledge graphs instead. Traditional vector-based RAG works by breaking content into small chunks (like tearing up a book into individual pages, paragraphs, and words) and then storing those bits and pieces in a separate vector database for retrieval. The technical term for this in the AI development world is chunking. Chunking is necessary because of the limitations of LLMs, such as the limited amount of text a model can take in at once. It is also far from what we, as content professionals, do when architecting and writing component-based, topic-oriented content. When you ask the model a question, it tries to find the right pieces and glue them back together. But here’s the problem:

- Losing the Big Picture: When you chop up content into little bits and pieces, you often lose the bigger context and other content on which the correct context depends. It’s like trying to understand a story by reading random paragraphs: things can get confusing fast.

- Guesswork Instead of Fact-Based Reasoning: These models often rely on what amounts to guessing based on learned patterns and associations that produce impressive results—most of the time, and therein lies the key phrase, “most of the time.” Technically, they’re “stochastic,” which is a fancy way of saying they make educated predictive guesses based on mathematical similarity. This can lead to errors, especially when you must have the correct answer. These stochastic models only simulate reasoning; they cannot achieve reliable and explainable deductive reasoning based on a source of truth that provides certainty. Deductive reasoning can only be achieved by adding an external source of curated, validated, and current facts.

- Overfitting: Imagine training for a race on a single track: great for that track, but not so much for others. That’s overfitting in a nutshell. Some engineering teams try to improve these stochastic models by custom-tuning general LLMs. The tuned models can get good at answering specific questions they’ve seen before but struggle with new ones and can no longer generalize. Fine-tuning also does nothing to address the practical challenges and costs posed by large and frequently changing content sources. How much fine-tuning can be done, and at what rate and cost, before it becomes unmanageable, unfeasible, and ineffective?

- Complex and Costly: Engineering teams have to create all sorts of complex workarounds, architectures, and methods to deal with the predictive limitations of these models. That makes the systems more complex to manage and more expensive to run, to say nothing of maintaining them and, more importantly, maintaining the content itself. Don’t believe me? Check out the many LinkedIn posts or subscribe to Medium.com to follow the daily avalanche of convoluted vector-based RAG models, many of them promoted like snake oil as turnkey commercial solutions.
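To make the “losing the big picture” problem concrete, here is a minimal sketch of naive fixed-size chunking. The function name `chunk_text`, the chunk size, and the sample passage are illustrative assumptions rather than the behavior of any particular RAG framework; real pipelines usually split by tokens and often add overlap.

```python
def chunk_text(text: str, size: int) -> list[str]:
    """Split text into consecutive pieces of at most `size` characters."""
    if size <= 0:
        raise ValueError("size must be positive")
    return [text[i:i + size] for i in range(0, len(text), size)]


# Hypothetical passage: the warning only makes sense next to the step.
passage = (
    "Step 3: Remove the cover. "
    "Warning: disconnect power before performing Step 3."
)

chunks = chunk_text(passage, 40)
for number, chunk in enumerate(chunks, start=1):
    print(number, repr(chunk))
```

With this sample, the boundary falls in the middle of the warning, so a retriever that returns only one of the two chunks hands the LLM an incomplete instruction.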

So, what’s the alternative? Many organizations already have a goldmine of structured, well-organized content thanks to DITA, and they are sitting on a solution that avoids these problems altogether. Our novel, non-proprietary approach based on open standards can also support componentized topics written in other formats, including Markdown, AsciiDoc, reStructuredText (RST), and other structured and unstructured topic-oriented formats.

Enter DITA-Driven Knowledge Graphs and DOM Graph RAG

DITA, or Darwin Information Typing Architecture, is like a blueprint for organizing complex content. It’s widely used in many industries that need to keep track of lots of technical and non-technical information—think product manuals or extensive documentation. The beauty of DITA is that it’s already structured, making it the perfect match for creating what’s called a knowledge graph.

Figure 1. A visual representation of a DITA Graph

DITA is based on XML, which provides what we call a hierarchical document object model (DOM). That is why we’ve formally named this model DOM Graph RAG rather than DITA RAG: we leverage the object-oriented-like nature of the DITA architecture, and the resulting graph can include DITA and, optionally, non-DITA source topics. We’ve used, and highly recommend using, the DITA schema and graph technology based on the Resource Description Framework (RDF) as the basis for document-oriented knowledge graphs. Both DITA and RDF are widely adopted open industry standards that allow for broad interchange. However, similar models can be built using other XML dialects such as J2008, DocBook, or TEI.

A knowledge graph is like a giant, smart structured map that shows how different pieces of information are connected. When you combine DITA with a knowledge graph, you get a system that not only remembers the big picture but also understands how everything relates and fits together.
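One way to picture such a map is as a set of subject-predicate-object statements, usually called triples. The sketch below uses made-up topic IDs and predicate names (`hasTopic`, `requires`, and so on are assumptions, not a real schema) to show how following connections keeps a topic together with the content it depends on.

```python
# Each fact is a (subject, predicate, object) triple.
# All identifiers below are hypothetical examples, not a real schema.
triples = {
    ("map:install_guide", "hasTopic", "topic:prereqs"),
    ("map:install_guide", "hasTopic", "topic:install"),
    ("topic:install", "type", "task"),
    ("topic:prereqs", "type", "concept"),
    ("topic:install", "requires", "topic:prereqs"),
}


def objects(subject: str, predicate: str) -> set[str]:
    """Return every object linked to `subject` by `predicate`."""
    return {o for s, p, o in triples if s == subject and p == predicate}


def context_for(topic: str) -> set[str]:
    """Collect a topic plus everything it transitively requires."""
    seen: set[str] = set()
    stack = [topic]
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(objects(current, "requires"))
    return seen


print(sorted(context_for("topic:install")))
```

Asking for the install topic also returns its prerequisites, which is the kind of context a bag of disconnected chunks cannot guarantee.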

Here’s Why This Approach Rocks:

- Keeping the Context Intact: Unlike the old method where content is chopped into bits, DITA-driven knowledge graphs keep everything in its proper context. It’s like reading a full chapter instead of random pages—everything makes more sense.

- Smarter Reasoning: Knowledge graphs don’t rely on guesswork like vector-based retrieval models. They use rules and logic along with something called Named Entity Recognition (NER), making them much more accurate and reliable, especially when the stakes are high.

- Built to Scale: These graphs and graph databases can manage big, complex queries with deductive reasoning without breaking a sweat. And since DITA content is already organized, it’s easy to scale up as needed.

- Easy to Keep Up to Date: DITA’s structured format makes it simple to manage changes. Whether you’re updating content, retiring old information, or adding new details, the knowledge graph stays current without a lot of hassle. With a vector database, how would anyone reliably unpublish, correctly update, or version content willy-nilly dumped into a vector data store without confusion or conflict? Content change management with current vector-retrieval models is virtually impossible and worse than inadequate. We think it’s a ticking time bomb and a looming disaster for companies betting their business on these models – they just don’t know it yet because surprise (not!), most AI/ML engineering teams still don’t consider content and content management up-front. Why would they? It’s just the docs, right?
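Because every topic is an addressable node, updating or unpublishing it comes down to replacing or deleting that node’s statements. Here is a minimal in-memory sketch of the idea; the function names and identifiers are hypothetical, and a real graph database would typically do this with SPARQL Update (`DELETE`/`INSERT`) rather than Python sets.

```python
Triple = tuple[str, str, str]


def unpublish_topic(graph: set[Triple], topic: str) -> None:
    """Remove every statement that mentions the topic, as subject or object."""
    stale = {t for t in graph if t[0] == topic or t[2] == topic}
    graph.difference_update(stale)


def replace_topic(graph: set[Triple], topic: str, fresh: set[Triple]) -> None:
    """Swap a topic's outgoing statements for a newly published version."""
    graph.difference_update({t for t in graph if t[0] == topic})
    graph.update(fresh)


# Hypothetical graph content.
graph: set[Triple] = {
    ("topic:install", "version", "1"),
    ("topic:install", "title", "Installing"),
    ("map:guide", "hasTopic", "topic:install"),
    ("topic:legacy", "version", "1"),
    ("map:guide", "hasTopic", "topic:legacy"),
}

replace_topic(graph, "topic:install", {
    ("topic:install", "version", "2"),
    ("topic:install", "title", "Installing the product"),
})
unpublish_topic(graph, "topic:legacy")
```

After these two calls the graph holds only version 2 of the install topic, still linked from its map, and no trace of the legacy topic.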

How to Build Your Own DITA-Driven Knowledge Graph

So, how do you get started with this better way of managing content for AI? It’s a lot simpler than you might think.

Step 1: Turn a DITAMap into a Smart Map (Ontology)

Start by converting your DITA schema into something called an ontology. This is like creating a blueprint that shows how all your content is connected. Tools like PoolParty can help capture the relationships and types of content in your topic structure.
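As a rough illustration of what such a blueprint contains, the sketch below writes a tiny ontology for DITA’s core information types as RDF Schema in Turtle syntax. It is not how PoolParty or any other tool does it; the `http://example.org/dita#` namespace and the `hasTopic` and `hasChild` property names are assumptions made for this example.

```python
# Hypothetical namespace; replace with one your organization controls.
PREFIXES = (
    "@prefix rdfs: <http://www.w3.org/2000/01/rdf-schema#> .\n"
    "@prefix rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#> .\n"
    "@prefix dita: <http://example.org/dita#> .\n"
)

# DITA information types and their parent class (None means top level).
CLASSES = {
    "Map": None,
    "Topic": None,
    "Concept": "Topic",
    "Task": "Topic",
    "Reference": "Topic",
}

# Relationship name -> (domain class, range class); names are illustrative.
PROPERTIES = {
    "hasTopic": ("Map", "Topic"),
    "hasChild": ("Topic", "Topic"),
}


def ontology_as_turtle() -> str:
    """Render the class and property definitions as Turtle text."""
    lines = [PREFIXES]
    for name, parent in CLASSES.items():
        lines.append(f"dita:{name} a rdfs:Class .")
        if parent is not None:
            lines.append(f"dita:{name} rdfs:subClassOf dita:{parent} .")
    for name, (domain, range_) in PROPERTIES.items():
        lines.append(
            f"dita:{name} a rdf:Property ; "
            f"rdfs:domain dita:{domain} ; rdfs:range dita:{range_} ."
        )
    return "\n".join(lines)


print(ontology_as_turtle())
```

A production ontology would cover far more of the DITA schema (specializations, relationship tables, metadata), but the shape is the same: classes for content types and properties for how they connect.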

Figure 2. A Graph Powered Chatbot

Step 2: Build the Knowledge Graph

Next, you’ll want to automate the construction of your knowledge graph. Manually creating and updating knowledge graphs is resource-prohibitive, and removing that manual work is the beauty and breakthrough elegance of this model. Automation involves transforming your DITA content into RDF (Resource Description Framework) format and loading it into a graph database. This graph database is where all the magic happens: it is where your content for AI lives and where the AI will retrieve information. You can even connect your current component content management system (cCMS) to continually feed the graph database, just like publishing output to any other channel. You can repeat this process frequently to automate change management. Remember, this model uses the source topics, not baked web pages. You also use the transforms to normalize and resolve things like reused content, just as you do when generating output for other channels.
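The transformation step can be sketched in a few lines. The example below walks the `topicref` hierarchy of a small DITA map with Python’s standard XML parser and emits N-Triples, a plain-text RDF format that graph databases can load. The base IRIs, the `hasTopic` and `hasChild` predicates, and the sample map are assumptions for illustration; a real transform would also resolve keys, conrefs, and other reuse before writing the graph.

```python
import xml.etree.ElementTree as ET

BASE = "http://example.org/content/"   # hypothetical base IRI
DITA = "http://example.org/dita#"      # hypothetical vocabulary namespace

SAMPLE_MAP = """
<map id="install_guide">
  <title>Installation Guide</title>
  <topicref href="prereqs.dita"/>
  <topicref href="install.dita">
    <topicref href="verify.dita"/>
  </topicref>
</map>
"""


def map_to_ntriples(map_xml: str) -> list[str]:
    """Turn the topicref hierarchy of a DITA map into N-Triples lines."""
    root = ET.fromstring(map_xml)
    map_iri = f"<{BASE}{root.get('id')}>"
    lines: list[str] = []

    def walk(element, parent_iri: str, predicate: str) -> None:
        # Only direct children, so nesting in the map becomes nesting in the graph.
        for ref in element.findall("topicref"):
            topic_iri = f"<{BASE}{ref.get('href')}>"
            lines.append(f"{parent_iri} <{DITA}{predicate}> {topic_iri} .")
            walk(ref, topic_iri, "hasChild")

    walk(root, map_iri, "hasTopic")
    return lines


for line in map_to_ntriples(SAMPLE_MAP):
    print(line)
```

Running the same transform every time the cCMS publishes is what turns the graph into just another output channel.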

Step 3: Make the Most of the Graph Database

Choosing the right graph database is key. You’ll want one that can manage large-scale operations and support reasoning and inferencing, that is, the ability to draw conclusions based on the data it has. SPARQL, a query language for RDF databases, lets you dig into your knowledge graph to find and retrieve exactly what you need. You then feed the retrieved content to an LLM and use the LLM for what it does best: summarizing and generating an answer.
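To give a feel for what a SPARQL query does, the sketch below shows a small query as text and then a pure-Python stand-in that performs the same pattern matching over an in-memory set of triples. The `dita:` vocabulary and the data are hypothetical, and the `match` helper is only a teaching aid; in practice the graph database’s SPARQL engine does this work, along with any inferencing.

```python
# SPARQL a graph database might run; the dita: vocabulary is hypothetical.
QUERY = """
PREFIX dita: <http://example.org/dita#>
SELECT ?topic WHERE {
  ?map   dita:hasTopic ?topic .
  ?topic a dita:Task .
}
"""

# Hypothetical graph content ("a" in SPARQL is shorthand for rdf:type).
TRIPLES = {
    ("map:guide", "dita:hasTopic", "topic:install"),
    ("map:guide", "dita:hasTopic", "topic:overview"),
    ("topic:install", "rdf:type", "dita:Task"),
    ("topic:overview", "rdf:type", "dita:Concept"),
}


def match(pattern, triples):
    """Yield variable bindings for one triple pattern; '?x' marks a variable."""
    for triple in triples:
        binding = {}
        for want, have in zip(pattern, triple):
            if want.startswith("?"):
                # A repeated variable must bind to the same value each time.
                if binding.setdefault(want, have) != have:
                    break
            elif want != have:
                break
        else:
            yield binding


def task_topics(triples):
    """Join two patterns on ?topic, mirroring the SPARQL text in QUERY."""
    in_map = {b["?topic"] for b in match(("?map", "dita:hasTopic", "?topic"), triples)}
    tasks = {b["?topic"] for b in match(("?topic", "rdf:type", "dita:Task"), triples)}
    return sorted(in_map & tasks)


print(task_topics(TRIPLES))
```

The point is that the answer is found by matching explicit facts, not by ranking text on similarity, so the same question returns the same topics every time.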

Step 4: Add Some Extra Smarts

DITA content can be made even smarter by adding taxonomy labels to maps and topics (think of them as tags that help categorize your content by product, subject, audience, etc.). You can also integrate a domain knowledge model—which is a specialized set of rules and facts related to your specific industry or field. This supercharges your knowledge graph, making it even more effective at understanding and retrieving the right information.
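Here is one way the labeling step might look, assuming the labels are carried in DITA’s standard filtering attributes (`audience`, `product`, `platform`) on the topic’s root element. That is only one option; your taxonomy may instead live in metadata elements or a separate classification scheme. The sample topic and its values are made up.

```python
import xml.etree.ElementTree as ET

# Hypothetical topic; attribute values are illustrative.
SAMPLE_TOPIC = """
<task id="install" audience="administrator" product="cloud onprem">
  <title>Installing the product</title>
</task>
"""

# DITA filtering attributes treated here as taxonomy labels.
LABEL_ATTRIBUTES = ("audience", "product", "platform")


def topic_labels(topic_xml: str) -> list[tuple[str, str, str]]:
    """Return (topic id, attribute, value) triples for each label value."""
    root = ET.fromstring(topic_xml)
    topic_id = root.get("id")
    triples = []
    for attribute in LABEL_ATTRIBUTES:
        # DITA allows several space-separated values in one attribute.
        for value in (root.get(attribute) or "").split():
            triples.append((topic_id, attribute, value))
    return triples


for triple in topic_labels(SAMPLE_TOPIC):
    print(triple)
```

Once these labels sit in the graph next to the topic nodes, a query can narrow retrieval to, say, administrator content for a single product before anything reaches the LLM.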

Step 5: Supercharge your Graph

Create a concept ontology about your specific business and products and add it to your graph. This is called a domain-specific knowledge model. The concepts and content relationships work together with the content nodes in the graph to find and retrieve exactly the right combination of content to feed to the LLM, which can then generate the right answer with far higher confidence than traditional vector RAG models. You can even use an LLM in what is called a “virtuous cycle” to assist in identifying key concepts and their relationships, but you’ll need to validate its suggestions rather than trust them or fully automate the process. A domain knowledge model is your trusted ground truth; it becomes your secret competitive weapon and, alongside your content, your most valuable IP.
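A small sketch can show how a concept model and content nodes work together. Below, a hypothetical concept hierarchy (narrower concept mapped to broader concept) is combined with hypothetical links from topics to the concepts they discuss, so that a question about a broad concept also retrieves topics tagged with narrower ones. Every concept, topic ID, and function name here is invented for illustration.

```python
# Hypothetical domain model: narrower concept -> broader concept.
BROADER = {
    "sales_tax": "tax",
    "use_tax": "tax",
    "exemption_certificate": "sales_tax",
}

# Hypothetical links from content nodes to the concepts they discuss.
ABOUT = {
    "topic:calc_sales_tax": {"sales_tax"},
    "topic:upload_certificate": {"exemption_certificate"},
    "topic:configure_sso": {"authentication"},
}


def with_narrower(concept: str) -> set[str]:
    """Return the concept plus every concept beneath it in the hierarchy."""
    found = {concept}
    changed = True
    while changed:
        changed = False
        for child, parent in BROADER.items():
            if parent in found and child not in found:
                found.add(child)
                changed = True
    return found


def topics_about(concept: str) -> list[str]:
    """Find topics tagged with the concept or any narrower concept."""
    wanted = with_narrower(concept)
    return sorted(t for t, concepts in ABOUT.items() if concepts & wanted)


print(topics_about("tax"))
```

A question about tax pulls in the sales tax and exemption certificate topics but not the single sign-on topic, because the relationships are stated in the model rather than guessed from wording.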

Tackling the Challenges

Of course, nothing worth doing is ever completely easy. There are a few challenges you might face:

- Keeping the Graph Up to Date: Knowledge graphs need to be regularly updated as new content is created or old content is changed. But with the right tools and automation, this can be pretty straightforward. If you create or maintain a separate digital record of your content inventory, you can use that metadata to tell the graph which topics to update or remove. The same record can supply audience, internal versus external status, draft or released state, version, language, and just about anything else, because the graph nodes representing maps and topics can carry and update such metadata.

- Meeting High Expectations: As your system gets more accurate, people will expect perfection. This is known as the “Precision Paradox.” It’s important to keep refining the system to meet these rising expectations. The precision paradox is another problem facing conventional vector-based retrieval models, because their highly predictive nature limits their accuracy and precision. If you need only 80 percent accuracy, that might suffice, but most businesses, especially highly regulated ones, need significantly better. Moreover, just imagine the degree of accuracy and reliability that will be required when shops start building and deploying assistive do-with and do-for agentic AI applications, which advanced engineering teams are already starting to develop.

- Combining with Other Systems: While knowledge graphs are great, you might still need to combine them with other systems, like vector databases, especially when dealing with massive volumes of monolithic, unstructured content. This hybrid approach gives you the best of both worlds when the tolerance levels for the application permit, but it’s not the preferred approach. Amazingly (not), engineering teams don’t talk to their content teams up front to see whether the content already uses a contextually correct topic information architecture. Equally confounding, they don’t consider converting monolithic unstructured content to topical models where appropriate.
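The inventory-driven update described in the first challenge can be sketched as a simple comparison between the content inventory and what the graph currently holds. The record fields, status values, and function name below are assumptions for illustration; a real inventory would carry more metadata, such as audience and language.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InventoryRecord:
    topic_id: str
    status: str   # hypothetical values: "draft" or "released"
    version: int


def plan_sync(inventory, graph_versions):
    """Compare released inventory records with the versions held in the graph."""
    released = {r.topic_id: r.version for r in inventory if r.status == "released"}
    return {
        "add": sorted(t for t in released if t not in graph_versions),
        "update": sorted(
            t for t, v in released.items()
            if t in graph_versions and graph_versions[t] != v
        ),
        "remove": sorted(t for t in graph_versions if t not in released),
    }


# Hypothetical inventory and current graph state (topic id -> version).
inventory = [
    InventoryRecord("topic:install", "released", 3),
    InventoryRecord("topic:upgrade", "released", 1),
    InventoryRecord("topic:beta_feature", "draft", 1),
]
graph_versions = {"topic:install": 2, "topic:retired": 5}

plan = plan_sync(inventory, graph_versions)
print(plan)
```

Draft topics never reach the graph, released topics are added or refreshed, and anything no longer released is removed, which is the kind of controlled unpublishing that is hard to do with loose chunks in a vector store.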

Figure 3. Knowledge in the Loop.

Wrapping It All Up

Using DITA-driven knowledge graphs for content retrieval in RAG models isn’t just a good idea; we think it’s a game-changer. By keeping content in context, enhancing reasoning, and providing a scalable, efficient system, this approach solves many of the problems that plague traditional vector-based models. For any organization looking to implement generative AI, this method offers a more reliable, accurate, and future-proof solution. It’s not a panacea, however, and it can be combined with other models and methods. The key is using the right model and techniques based on the type and nature of the content, either alone or in combination, to deliver the right content to the right user in the right experience. So, if you’re ready to take your content retrieval and generative AI to the next level, it’s time to embrace the power of DITA and knowledge graphs.

This new and innovative approach has been several years in the making. We even wrote about it right here in the December 2022 issue of the CIDM Best Practices Newsletter, in an article titled “From Information Wars to Knowledge Wars And why business leaders should care, right now!” There we described what was coming and what was needed. We’ve all intrinsically known that structured content is immensely valuable for AI, but no one had an actual model, architecture, and implementation to prove it. We do now. Proponents of Markdown and an anti-DITA minority once proclaimed that DITA was getting “long-in-the-tooth,” as in outdated. All we can say is “Look at us now!”

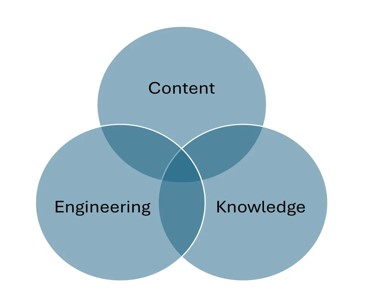

This project wasn’t done as your typical show-and-tell, where many say “Hey, look at what we did!” and leave everyone in the lurch about how to do it in their own shops. To that end, we’ve published a detailed paper at ThinkingDocumentation.com that describes a fully implemented DOM Graph RAG model and architecture, with more detail on the steps to recreate it yourself. It is “a” model that we’re certain others will improve upon, but we’re confident that together, as a community, we can show the world that generative AI is not just the domain of programmers. It requires (no, demands) combining intelligent content, knowledge management, and engineering for holistic AI solutions. The common refrain has been “human in the loop.” Let’s change that and educate others that accurate, relevant, flexible, and truly explainable AI (XAI) requires knowledge in the loop.

About the author: Michael Iantosca is the Senior Director of Content Platforms at Avalara Inc. Michael spent 38 of his 40 years at IBM as a content pioneer – leading the design and development of advanced content management systems and technology that began at the very dawn of the structured content revolution in the early 80s. Dual trained as a content professional and systems engineer, he led the charge building some of the earliest content platforms based on structured content. If Michael hadn’t prevailed in a pitched internal battle to develop an XML platform over a planned SGML variant called WebDoc in the mid-90s at Big Blue, DITA, and the entire industry that supports it, might not presently exist. He was responsible for forming the XML team and was a member of the workgroup at IBM that developed DITA.